I am an Applied Scientist II at Amazon in Seattle. I received my Ph.D. in Computer Science from North Carolina State University under the supervision of Dr. Tim Menzies. My doctoral thesis focused on finding and mitigating algorithmic "bias" in machine learning models. Currently, I am exploring applications of Generative AI, Large Language Models (LLMs), and Graph Neural Networks to address Amazon-scale business problems. Before coming to NC State, I was a full-stack software developer at TCG Digital. I obtained my bachelor's degree in Computer Science from Jadavpur University. I have done research internships at Intel Corporation (Bellevue, WA), IBM T.J. Watson Research Labs (Yorktown Heights, NY), and Amazon (Seattle, WA).

International Conference on Knowledge Discovery and Data Mining (KDD 2024): We present a comprehensive benchmark suite designed to assess the performance of LLMs in identifying and mitigating fraudulent and abusive language across various real-world scenarios. Our benchmark encompasses a diverse set of tasks, including detecting spam emails, hate speech, misogynistic language, and more. We evaluated several state-of-the-art LLMs, including models from Anthropic, Mistral AI, and the AI21 family, to assess their capabilities in this critical domain. The results indicate that while LLMs exhibit proficient baseline performance on individual fraud and abuse detection tasks, their performance varies considerably across tasks; they struggle particularly with tasks that demand nuanced pragmatic reasoning, such as identifying diverse forms of misogynistic language. These findings have important implications for the responsible development and deployment of LLMs in high-risk applications. Our benchmark suite can serve as a tool for researchers and practitioners to systematically evaluate LLMs for multi-task fraud detection and to drive the creation of more robust, trustworthy, and ethically aligned systems for fraud and abuse detection.

IEEE Transactions on Software Engineering (TSE) 2022: FairMask is a model-based extrapolation method that can both mitigate bias and explain its cause. In our FairMask approach, protected attributes are represented by models learned from the other independent variables (and these models offer extrapolations over the space between existing examples). We then use these extrapolation models to relabel protected attributes seen later in testing data or at deployment time. Our approach aims to offset the biased predictions of the classification model by rebalancing the distribution of protected attributes. FairMask can achieve significantly better group and individual fairness (as measured by several metrics) than benchmark methods.
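
As a minimal sketch of the relabeling idea, assuming each example is a dict of numeric features: a k-nearest-neighbor classifier learned from the non-protected features stands in for the extrapolation model (an illustrative substitute, not necessarily the learner FairMask uses), and `fit_extrapolator` and `mask_protected` are hypothetical names.

```python
import math
from collections import Counter


def fit_extrapolator(train_rows, protected, k=3):
    """Return a predictor of `protected` learned from the other features.

    Stand-in extrapolation model (an assumption): k-nearest neighbors with
    Euclidean distance over every feature except the protected attribute.
    """
    def predict(row):
        features = [f for f in row if f != protected]

        def distance(other):
            return math.sqrt(sum((other[f] - row[f]) ** 2 for f in features))

        nearest = sorted(train_rows, key=distance)[:k]
        votes = Counter(r[protected] for r in nearest)
        return votes.most_common(1)[0][0]

    return predict


def mask_protected(rows, protected, extrapolate):
    """Return copies of `rows` whose protected attribute is replaced by the
    extrapolated value; the input rows are not modified."""
    masked = []
    for row in rows:
        new_row = dict(row)
        new_row[protected] = extrapolate(row)
        masked.append(new_row)
    return masked


# Toy data (hypothetical): "sex" is recoverable from income and age.
train = [
    {"income": 20, "age": 25, "sex": 0},
    {"income": 22, "age": 30, "sex": 0},
    {"income": 24, "age": 28, "sex": 0},
    {"income": 80, "age": 45, "sex": 1},
    {"income": 85, "age": 50, "sex": 1},
    {"income": 90, "age": 40, "sex": 1},
]
extrapolate = fit_extrapolator(train, "sex", k=3)
print(mask_protected([{"income": 83, "age": 44, "sex": 0}], "sex", extrapolate))
# [{'income': 83, 'age': 44, 'sex': 1}]
```

A deployed classifier would then be queried with the masked rows rather than the originals, so its prediction no longer depends on the recorded protected value.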

ACM Transactions on Software Engineering and Methodology (TOSEM) 2022: Testing machine learning software for ethical bias has become a pressing concern. In response, recent research has proposed a plethora of new fairness metrics, for example, the dozens of fairness metrics in the IBM AIF360 toolkit. This raises the question: how can any fairness tool satisfy such a diverse range of goals? This paper shows that many of those fairness metrics effectively measure the same thing. Based on experiments using seven real-world datasets, we find that (a) 26 classification metrics can be clustered into seven groups, and (b) four dataset metrics can be clustered into three groups. Further, each reduced set may actually predict different things. Hence, it is no longer necessary (or even possible) to satisfy all fairness metrics.
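
The sketch below writes out four widely used group-fairness measures by hand for binary labels and predictions. The sign and ratio conventions (unprivileged minus, or divided by, privileged) follow those of toolkits such as IBM AIF360, but `group_fairness` is an illustrative helper, not AIF360's API, and it does not reproduce the paper's clustering analysis.

```python
def rate(values):
    """Fraction of 1s in a list of 0/1 values (0.0 if the list is empty)."""
    return sum(values) / len(values) if values else 0.0


def group_fairness(y_true, y_pred, group):
    """Common group-fairness metrics for binary predictions.

    group[i] == 1 marks the privileged group, 0 the unprivileged group.
    Differences are unprivileged minus privileged; 0 (or 1 for the ratio) is ideal.
    """
    def preds(g, label=None):
        # Predictions for group g, optionally restricted to one true label.
        return [p for t, p, gi in zip(y_true, y_pred, group)
                if gi == g and (label is None or t == label)]

    pos_u, pos_p = rate(preds(0)), rate(preds(1))        # P(pred = 1 | group)
    tpr_u, tpr_p = rate(preds(0, 1)), rate(preds(1, 1))  # true-positive rates
    fpr_u, fpr_p = rate(preds(0, 0)), rate(preds(1, 0))  # false-positive rates
    return {
        "statistical_parity_difference": pos_u - pos_p,
        "disparate_impact": pos_u / pos_p if pos_p else float("inf"),
        "equal_opportunity_difference": tpr_u - tpr_p,
        "average_odds_difference": 0.5 * ((fpr_u - fpr_p) + (tpr_u - tpr_p)),
    }


# Toy data (hypothetical): first four rows unprivileged, last four privileged.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0]
group = [0, 0, 0, 0, 1, 1, 1, 1]
print(group_fairness(y_true, y_pred, group))
```

On this toy data, statistical parity difference, equal opportunity difference, and average odds difference all come out to -0.5, a small illustration of how distinct metrics can move together.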

ICSE 2022 (Fairware): Semi-supervised learning is a machine learning technique in which labeled data is incrementally used to generate pseudo-labels for the rest of the data (and then all of that data is used for model training). In this work, we apply four popular semi-supervised techniques as pseudo-labelers to create fair classification models. Our framework, Fair-SSL, takes a very small amount (10%) of labeled data as input and generates pseudo-labels for the unlabeled data. To the best of our knowledge, this is the first SE work where semi-supervised techniques are used to fight ethical bias in SE ML models.
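
Self-training, the simplest form of pseudo-labeling, shows the mechanics: fit on the small labeled set, label the unlabeled rows, then refit on everything and relabel. The nearest-centroid classifier and all function names here are illustrative assumptions; Fair-SSL's four semi-supervised techniques and any fairness-specific steps are not reproduced.

```python
def fit_centroids(X, y):
    """Nearest-centroid classifier: the mean feature vector of each label."""
    sums, counts = {}, {}
    for x, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(centroids, x):
    """Label whose centroid is closest to x (squared Euclidean distance)."""
    return min(centroids,
               key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))


def self_train(X_lab, y_lab, X_unlab, rounds=3):
    """Pseudo-label X_unlab with a model fit on the labeled rows, then
    repeatedly refit on labeled + pseudo-labeled rows and relabel."""
    model = fit_centroids(X_lab, y_lab)
    pseudo = [predict(model, x) for x in X_unlab]
    for _ in range(rounds):
        model = fit_centroids(X_lab + X_unlab, y_lab + pseudo)
        pseudo = [predict(model, x) for x in X_unlab]
    return model, pseudo


# Toy data (hypothetical): two labeled points, four unlabeled ones.
X_lab, y_lab = [[0.0, 0.0], [10.0, 10.0]], [0, 1]
X_unlab = [[1.0, 0.5], [0.5, 1.5], [9.0, 9.5], [11.0, 10.0]]
model, pseudo = self_train(X_lab, y_lab, X_unlab)
print(pseudo)  # [0, 0, 1, 1]
```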

ESEC/FSE 2021 (ACM SIGSOFT Distinguished Paper Award Winner): This paper postulates that the root causes of bias are the prior decisions that affect (a) what data was selected and (b) the labels assigned to those examples. Our Fair-SMOTE algorithm removes biased labels and rebalances internal distributions so that examples are balanced with respect to the sensitive attribute in both the positive and negative classes. In testing, this method was just as effective at reducing bias as prior approaches. Further, models generated via Fair-SMOTE achieve higher performance (measured in terms of recall and F1) than other state-of-the-art fairness improvement algorithms.
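
A simplified sketch of the rebalancing step, assuming a binary class label and a binary sensitive attribute in each row: every (sensitive value, class) subgroup present in the data is oversampled to the size of the largest one by interpolating between two random members of the same subgroup. This plain SMOTE-style interpolation is a stand-in rather than Fair-SMOTE's exact generation procedure, the biased-label removal step is omitted, and `rebalance` is a hypothetical name.

```python
import random
from collections import defaultdict


def rebalance(rows, sensitive, label, seed=0):
    """Oversample every (sensitive, label) subgroup to the largest subgroup's size.

    Rows are dicts of numeric features. Each synthetic row interpolates the
    features of two random members of the same subgroup (SMOTE-style); subgroups
    absent from the data cannot be created this way.
    """
    rng = random.Random(seed)
    groups = defaultdict(list)
    for row in rows:
        groups[(row[sensitive], row[label])].append(row)
    target = max(len(members) for members in groups.values())
    balanced = list(rows)
    for (s, y), members in groups.items():
        for _ in range(target - len(members)):
            a, b = rng.choice(members), rng.choice(members)
            gap = rng.random()  # position along the segment from a to b
            synthetic = {f: a[f] + gap * (b[f] - a[f])
                         for f in a if f not in (sensitive, label)}
            synthetic[sensitive], synthetic[label] = s, y
            balanced.append(synthetic)
    return balanced


# Toy data (hypothetical): subgroup sizes 3, 1, 1, and 2.
rows = [
    {"x": 1.0, "s": 0, "y": 0}, {"x": 2.0, "s": 0, "y": 0}, {"x": 3.0, "s": 0, "y": 0},
    {"x": 4.0, "s": 0, "y": 1},
    {"x": 5.0, "s": 1, "y": 0},
    {"x": 6.0, "s": 1, "y": 1}, {"x": 7.0, "s": 1, "y": 1},
]
balanced = rebalance(rows, "s", "y")
print(len(balanced))  # 12
```

The result has 12 rows, three in each of the four subgroups.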

ASE 2020 (NIER): Machine learning software is being used in many applications (finance, hiring, admissions, criminal justice) that have a huge social impact. But this software sometimes behaves in a biased way, discriminating on the basis of sensitive attributes such as sex and race. Prior works concentrated on finding and mitigating bias in ML models. A recent trend is to use instance-based, model-agnostic explanation methods such as LIME to find bias in model predictions. Our work concentrates on finding shortcomings of current bias measures and explanation methods. We show how our proposed method based on K nearest neighbors can overcome those shortcomings and find the underlying bias of black-box models. Our results are more trustworthy and more helpful for practitioners. Finally, we describe our future framework, which combines explanation and planning to build fair software.

ESEC/FSE 2020: Machine learning software is increasingly being used to make decisions that affect people's lives. But sometimes the core part of this software (the learned model) behaves in a biased manner that gives undue advantages to a specific group of people (determined by sex, race, etc.). In this work, we (a) explain how ground-truth bias in training data affects machine learning model fairness and how to find that bias in AI software, and (b) propose a method, Fairway, which combines pre-processing and in-processing approaches to remove ethical bias from training data and trained models. Our results show that we can find and mitigate bias in a learned model without significantly damaging its predictive performance. We propose that (1) testing for bias and (2) bias mitigation should be a routine part of the machine learning software development life cycle. Fairway offers strong support for both purposes.

ASE 2019 (LBR Workshop): Machine learning software is increasingly being used to make decisions that affect people's lives. Potentially, the application of that software will result in fairer decisions because (unlike humans) machine learning software is not biased. However, recent results show that the software within many data mining packages exhibits "group discrimination"; i.e., its decisions are inappropriately affected by "protected attributes" (e.g., race, gender, age). This paper shows that making fairness a goal during hyperparameter optimization can preserve the predictive power of a model learned by a data miner while also generating fairer results. To the best of our knowledge, this is the first application of hyperparameter optimization as a tool for software engineers to generate fairer software.
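
One simple way to make fairness a tuning goal is a weighted objective, accuracy minus `lam` times the absolute statistical parity difference, searched over a grid of configurations. The threshold-indexed model family, the weight `lam`, and the function names are illustrative assumptions; this is not the optimizer or objective used in the paper, and in practice the scores would be computed on held-out validation data.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def statistical_parity_difference(y_pred, group):
    """P(pred = 1 | unprivileged) - P(pred = 1 | privileged); group 1 is privileged."""
    unpriv = [p for p, g in zip(y_pred, group) if g == 0]
    priv = [p for p, g in zip(y_pred, group) if g == 1]
    return sum(unpriv) / len(unpriv) - sum(priv) / len(priv)


def threshold_model(t):
    """Hypothetical model family indexed by a single hyperparameter t."""
    return lambda score: 1 if score >= t else 0


def tune(configs, build, X, y, group, lam=1.0):
    """Grid search for the config maximizing accuracy - lam * |SPD|."""
    best, best_score = None, float("-inf")
    for cfg in configs:
        model = build(cfg)
        preds = [model(x) for x in X]
        score = accuracy(y, preds) - lam * abs(statistical_parity_difference(preds, group))
        if score > best_score:
            best, best_score = cfg, score
    return best, best_score


# Toy validation data (hypothetical): one score per example.
X = [0.9, 0.8, 0.6, 0.4, 0.3, 0.2]
y = [1, 1, 1, 0, 0, 0]
group = [1, 1, 0, 0, 0, 1]
print(tune([0.35, 0.5, 0.7], threshold_model, X, y, group, lam=0.0)[0])  # 0.5
print(tune([0.35, 0.5, 0.7], threshold_model, X, y, group, lam=1.0)[0])  # 0.35
```

With `lam=0.0` the search picks the most accurate threshold; with `lam=1.0` it gives up some accuracy for a threshold whose positive-prediction rates are equal across groups.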

ESEC/FSE 2019: Maintaining web services is a mission-critical task. Any downtime of web-based services means loss of revenue. Worse, such downtime can damage an organization's reputation as a reliable service provider (and in the current competitive web services market, such a loss of reputation causes extensive loss of future revenue). To address this issue, we developed SPIKE, a data mining tool that can predict upcoming service breakdowns half an hour into the future.

A "hero" project is one where 80% or more of the contributions are made by 20% of the developers. In the literature, such projects are discouraged because they might cause bottlenecks in development and communication. This paper explores the effect of having heroes in a project from a code quality perspective. After experimenting on 1100+ GitHub projects, we conclude that heroes are a very useful part of modern open source projects.
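
The 80/20 definition translates directly into a check over per-developer contribution counts. The sketch assumes contributions have already been counted per developer (for example, commits); rounding the top 20% up to at least one developer is an assumption, not a detail taken from the paper, and `is_hero_project` is a hypothetical name.

```python
def is_hero_project(contributions, hero_pct=80, dev_pct=20):
    """True if the top `dev_pct` percent of developers (rounded up, at least one)
    made at least `hero_pct` percent of all contributions."""
    counts = sorted(contributions.values(), reverse=True)
    total = sum(counts)
    if total == 0:
        return False
    # Ceiling division and the final comparison both stay in integer arithmetic.
    top_n = max(1, -(-len(counts) * dev_pct // 100))
    return sum(counts[:top_n]) * 100 >= hero_pct * total


# Hypothetical contribution counts per developer.
print(is_hero_project({"ana": 90, "bo": 5, "cy": 3, "di": 1, "ed": 1}))       # True
print(is_hero_project({"ana": 30, "bo": 25, "cy": 20, "di": 15, "ed": 10}))   # False
```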

ICSE 2019: Diversity, including gender diversity, is valued by many software development organizations, yet the field remains dominated by men. One reason for this lack of diversity is gender bias. In this paper, we study the effects of that bias by using an existing framework derived from the gender studies literature. We adapt the four main effects proposed in the framework by posing hypotheses about how they might manifest on GitHub, then evaluate those hypotheses quantitatively. While our results show that these effects are largely invisible on the GitHub platform itself, there are still signals of women concentrating their work in fewer places and being more restrained in communication than men.

Maumita Chakraborty, Sumon Chowdhury, Joymallya Chakraborty, Ranjan Mehera, Rajat Kumar Pal

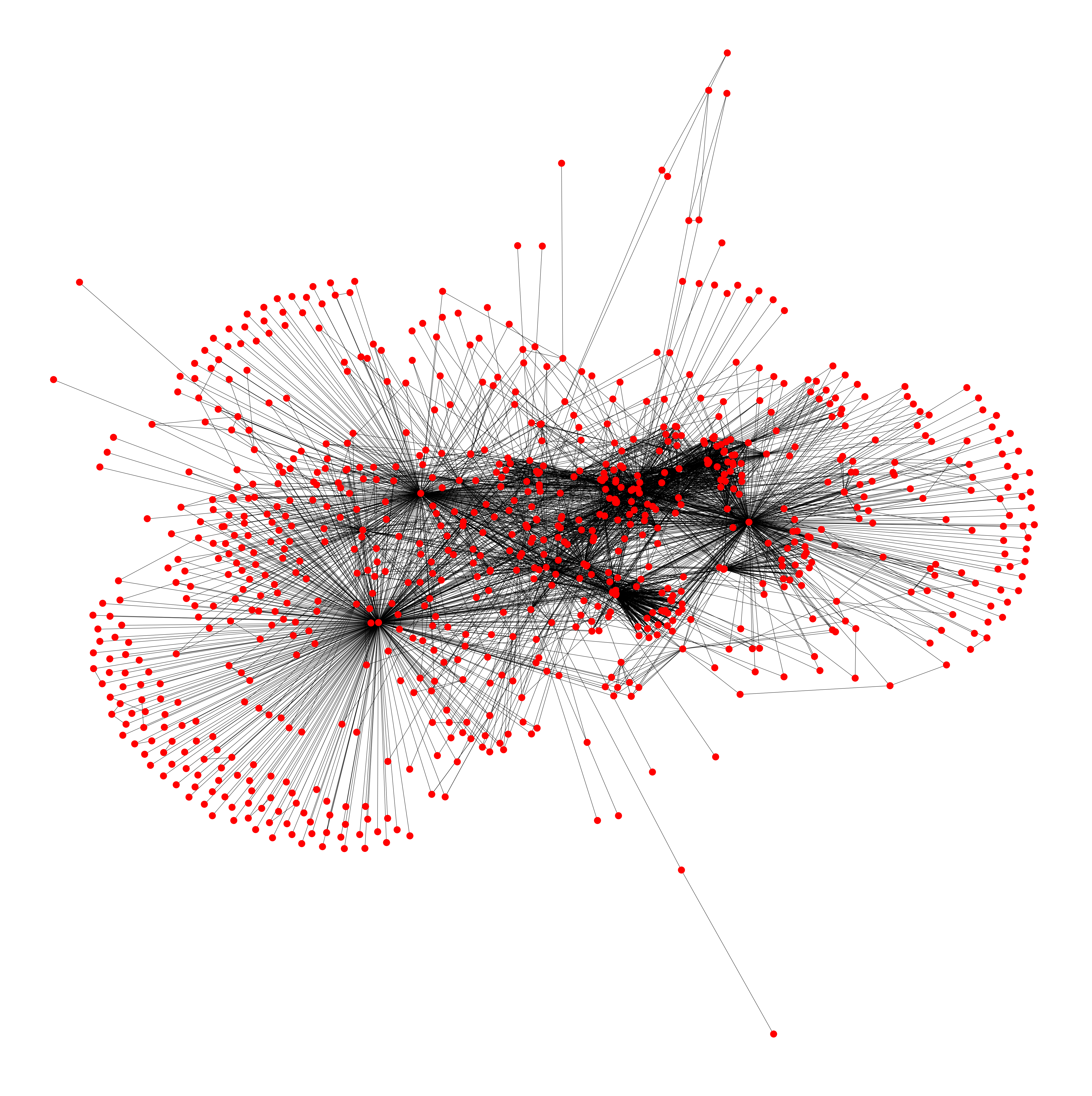

Complex & Intelligent Systems (Springer), 2018: Generating all possible spanning trees of a graph is a major area of research in graph theory, as the number of spanning trees of a graph increases exponentially with graph size. Several algorithms of varying efficiency have been developed since the early 1960s by researchers around the globe. This article is an exhaustive literature survey of these algorithms, assuming the input to be a simple, undirected, connected graph of finite order, and contains detailed analysis and comparisons of both the theoretical and experimental behavior of these algorithms.
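
For very small graphs, the problem the survey addresses can be stated as a naive baseline: try every subset of n-1 edges and keep those with no cycle, detected with union-find. This exhaustive enumeration grows exponentially and is not one of the surveyed algorithms; it only illustrates the input class the survey assumes (simple, undirected, connected, finite).

```python
from itertools import combinations


def find(parent, v):
    """Union-find root lookup with path halving."""
    while parent[v] != v:
        parent[v] = parent[parent[v]]
        v = parent[v]
    return v


def spanning_trees(n, edges):
    """Yield every spanning tree (as a tuple of edges) of a simple undirected
    connected graph on vertices 0..n-1.

    Any n-1 edges that contain no cycle connect all n vertices, so each
    acyclic (n-1)-edge subset is a spanning tree.
    """
    for subset in combinations(edges, n - 1):
        parent = list(range(n))
        for u, v in subset:
            ru, rv = find(parent, u), find(parent, v)
            if ru == rv:  # this edge would close a cycle
                break
            parent[ru] = rv
        else:
            yield subset


k4 = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]
print(sum(1 for _ in spanning_trees(4, k4)))  # 16
```

For the complete graph K4 it yields 16 trees, matching Cayley's formula n^(n-2).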

May 2021 - August 2021 (Seattle, WA): I worked as a member of the Abuse Prevention Science team to find emerging abuse on the Amazon Product Detail Page.

June 2020 - August 2020 (Yorktown Heights, NY): I worked on state management and persistence in Mono2Micro, a project that converts monolithic applications to microservices.

May 2019 - August 2019 (Bellevue, WA): I worked on post-training quantization of ONNX and TensorFlow deep learning models, and on computational graph optimization in ONNX Runtime.

May 2018 - August 2018 (Bellevue, WA): I explored optimization opportunities in .NET Core garbage collection and implemented proof-of-concept (PoC) prototypes, which were then verified against different workloads.

July 2015 - June 2017 (Salt Lake, Kolkata): I was a core developer on two projects. For the first, I designed and developed a B2B travel search engine, implementing middleware services and integrating them with the front end. For the second, I designed and implemented business intelligence software to retrieve, analyze, transform, and report data. It allows users to create dashboards using its own customizable visualizations, and it offers advanced analytics features such as data modelling, forecasting, and product affinity analysis.

I attended the 34th IEEE/ACM International Conference on Automated Software Engineering (ASE 2019) in San Diego, California, where I presented a poster in the Late Breaking Results track. The poster was based on my short paper, "Software Engineering for Fairness: A Case Study with Hyperparameter Optimization".

I attended the 27th ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering (ESEC/FSE 2019) in Tallinn, Estonia, and presented two papers there. The first, "TERMINATOR: Better Automated UI Test Case Prioritization", addresses test case prioritization; the second, "Predicting Breakdowns in Cloud Services (with SPIKE)", addresses cloud computing.
